Hello, Marcus.

I really appreciate that you made this incredible software. I am certain that this plugin will become an industry standard for a multitude of use cases in the near future.

I am curious about the development direction of Ragdoll Dynamics.

I recently saw someone post on the forum about mocap.

https://forums.ragdolldynamics.com/t/mocap-to-ragdoll-simulation-in-live/1195

It was amazing! That has been my dream: full real-time animation.

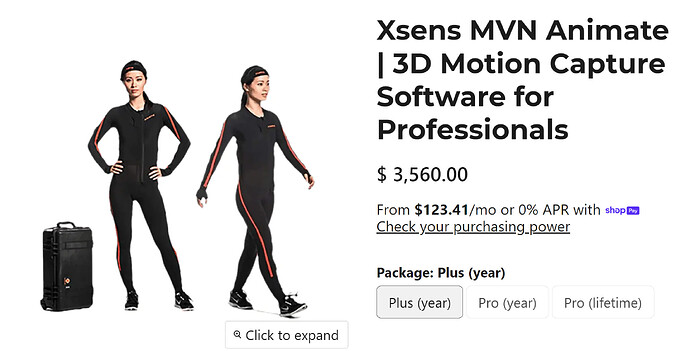

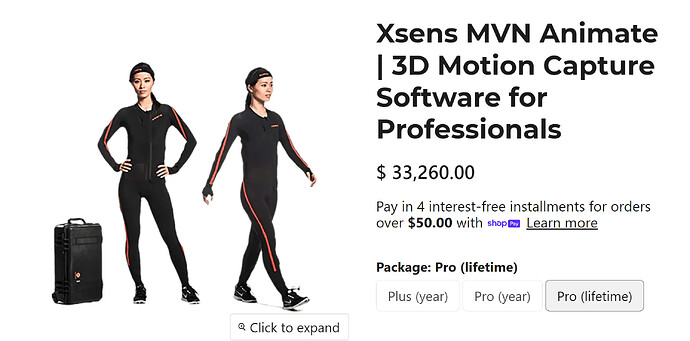

I’d like to try that right away, but unfortunately motion capture equipment is quite expensive.

And it’s not only the equipment, but also the software.

Even the software that takes the motion tracker data and brings it into Maya isn’t sold with a perpetual license; it is licensed per dongle, with annual fees of more than a few thousand dollars.

I acknowledge the mocap developers’ hard work, but this is a price that individual animators simply cannot afford.

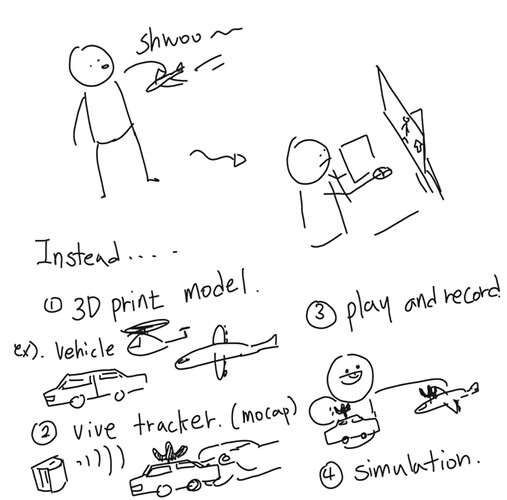

So I’m looking into other solutions.

One of them is VR tracking equipment.

In that mocap Ragdoll post, he is actually using VR controllers and VR trackers.

A VR controller costs close to 300 dollars, and a set of VR trackers covering up to 7 tracking points costs about 1,100 dollars, including Vive Base Station 2.0 and Vive Tracker 3.0.

(I am Korean, so I estimated these from Korean prices; I don’t know the exact exchange rate.)

It is still expensive, but in my opinion its accuracy is comparable to other existing motion capture solutions, and the price is reasonable.

But the software is still a problem.

As far as I know, it is impossible to bring that motion capture data directly into Maya without the $10,000 software provided by mocap companies.

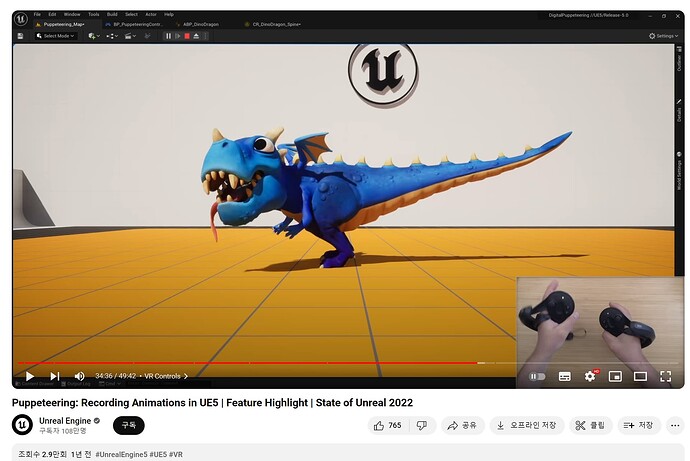

However, there is software that supports VR controllers and trackers on its own: game engines.

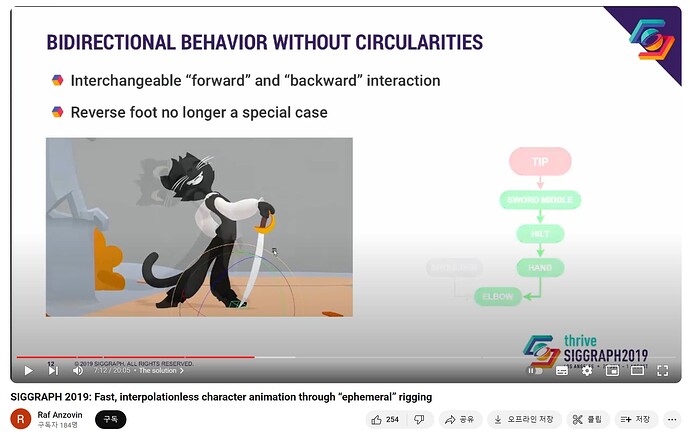

I wonder if you have seen this video.

If you haven’t, please watch it.

This is my suggestion, and I think it would be a great direction for the future of this plugin.

https://youtu.be/r1fHOS4XaeE?si=B980lNIk_oSfPLvK&t=2074

Take a look at around 34 minutes and 34 seconds.

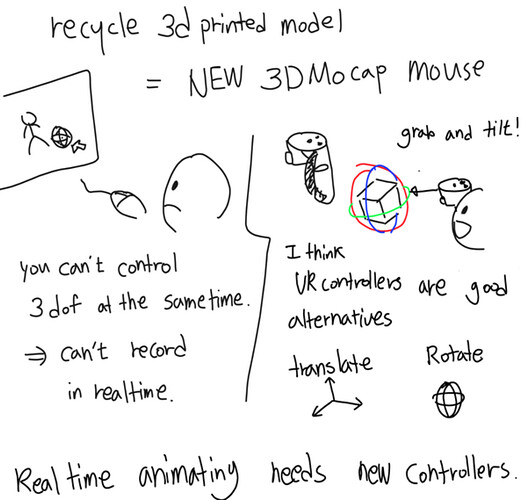

By nature, puppeteering is structurally impossible with an ordinary mouse, which only gives you two axes of input at a time.

I remember what you said in our short conversation on Discord.

I totally agree with you. It’s rare to find someone who acknowledges and understands the value of live animation this early.

I think your plugin can change many studios and industries. It can become the foundation for indie animators and one-person animation directors.

I really appreciate that you brought recording into Maya through Live mode.

If I understand correctly, you created a separate timeline buffer to keep the simulation and recording timelines apart, which also impressed me a lot.

Combining animation with Ragdoll simulation is also a truly groundbreaking idea.

I’d like to ask whether you have any plans to extend this feature to use the VR controller input provided by SteamVR or the 6-DoF data from Vive trackers.

Of course, I know this is going to be a really difficult challenge. In fact, I think this is the kind of thing Autodesk should adopt as a new official system, rather than leaving it to plug-in developers to extend.

Still, wouldn’t a great software developer like you be able to do even this?

Or, if I can help, I would like to try developing it on top of your plugin.

I have an Oculus Quest 3, Vive Base Station 2.0, Vive trackers, and Tundra trackers.

As long as I can get the tracker data, it would be fairly easy to connect it to a locator in Maya. I want to connect that to your simulation timeline in real time and record it.
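To make the “connect it to a locator” step concrete, here is a minimal Python sketch of the conversion I have in mind. It assumes each tracker sample arrives OpenXR-style, as a position in meters plus a unit quaternion (x, y, z, w) in a right-handed, +Y-up space, and that the Maya locator uses Maya’s defaults: Y-up, centimeters, and the `xyz` rotate order. `TrackerPose`, `quat_to_euler_xyz_deg`, and `pose_to_locator_channels` are hypothetical names of mine, and actually reading the data from SteamVR or OpenXR is not shown.

```python
import math
from typing import NamedTuple, Tuple

# Assumption: tracker positions are in meters; Maya's default linear unit is centimeters.
METERS_TO_CM = 100.0


class TrackerPose(NamedTuple):
    """Hypothetical container for one 6-DoF tracker sample.

    Assumes OpenXR-style data: position in meters and a quaternion (x, y, z, w)
    in a right-handed, +Y-up space, which matches Maya's default Y-up scene.
    """
    px: float
    py: float
    pz: float
    qx: float
    qy: float
    qz: float
    qw: float


def quat_to_euler_xyz_deg(qx: float, qy: float, qz: float, qw: float) -> Tuple[float, float, float]:
    """Convert a quaternion to Euler angles in degrees for Maya's 'xyz' rotate order.

    With column vectors this decomposes R = Rz(rz) @ Ry(ry) @ Rx(rx), i.e. X is applied first.
    Near ry = +/-90 degrees (gimbal lock) X and Z become coupled and the split is not unique.
    """
    # Normalize so small drift in the incoming quaternion doesn't skew the angles.
    n = math.sqrt(qx * qx + qy * qy + qz * qz + qw * qw)
    if n == 0.0:
        raise ValueError("zero-length quaternion")
    qx, qy, qz, qw = qx / n, qy / n, qz / n, qw / n

    rx = math.atan2(2.0 * (qw * qx + qy * qz), 1.0 - 2.0 * (qx * qx + qy * qy))
    # Clamp to [-1, 1] so rounding error near +/-90 degrees can't make asin fail.
    sin_ry = max(-1.0, min(1.0, 2.0 * (qw * qy - qz * qx)))
    ry = math.asin(sin_ry)
    rz = math.atan2(2.0 * (qw * qz + qx * qy), 1.0 - 2.0 * (qy * qy + qz * qz))
    return math.degrees(rx), math.degrees(ry), math.degrees(rz)


def pose_to_locator_channels(pose: TrackerPose) -> Tuple[Tuple[float, float, float], Tuple[float, float, float]]:
    """Return (translate_cm, rotate_deg) values ready to set on a Maya locator."""
    translate = (pose.px * METERS_TO_CM, pose.py * METERS_TO_CM, pose.pz * METERS_TO_CM)
    rotate = quat_to_euler_xyz_deg(pose.qx, pose.qy, pose.qz, pose.qw)
    return translate, rotate
```

Inside Maya, the returned values could then be applied to a locator on each sample with something like `cmds.xform(locator, worldSpace=True, translation=t, rotation=r)`. How that would feed into Ragdoll’s own Live recording timeline is exactly the part I don’t know yet.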

I’m a student who can handle Python and a little C.

Lately I’ve been studying how to get this tracking data into Maya.

Of course, Maya doesn’t seem to have an OpenXR API, but… do you think recording a mocap-based Ragdoll simulation in Maya is impossible?