Hi there,

I was wondering if it would be possible to implement functionality for creating markers on simple bone chains more quickly, for batch setups.

What I had in mind was to get results similar to the Alien rig in your demo solvers, in a scenario where we have multiple characters like that with different limb sizes, etc., or props with many hanging components: things that don’t need as much tuning as a main character but that still require a lot of manual work.

I’ll try to describe roughly what I had in mind.

-

Specifically, I would like to be able to select the root bone of a chain, click on the end of that chain, and have a shortcut that assigns and connects the whole chain at once, without having to select each bone.
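To make the selection part concrete, here is a rough sketch in plain Python. The `Bone` class and `chain_between` function are hypothetical stand-ins I made up for illustration, not Blender or Ragdoll types. The idea is just to walk parents from the clicked end bone until the root is reached.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Bone:
    """Hypothetical stand-in for a rig bone; not a Blender or Ragdoll type."""
    name: str
    parent: Optional["Bone"] = None


def chain_between(root: Bone, tip: Bone) -> List[Bone]:
    """Return the bones from root to tip, inclusive, by walking parents from the tip."""
    chain = []
    bone: Optional[Bone] = tip
    while bone is not None:
        chain.append(bone)
        if bone is root:
            chain.reverse()  # collected tip-to-root, so flip to root-to-tip
            return chain
        bone = bone.parent
    raise ValueError(f"{tip.name!r} is not a descendant of {root.name!r}")


# Usage: a four-bone tail where only the first and last bone are selected.
hips = Bone("hips")
tail_1 = Bone("tail_1", hips)
tail_2 = Bone("tail_2", tail_1)
tail_3 = Bone("tail_3", tail_2)
tail_4 = Bone("tail_4", tail_3)

selected = chain_between(tail_1, tail_4)
print([b.name for b in selected])
```

Walking upward from the end avoids any ambiguity at forks, since every bone has at most one parent.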

-

Better still would be a shortcut that starts from the root and has the markers progress in the direction of the bone orientations until they reach the end of the chain.
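Roughly, I picture the root-only version working like the sketch below. It is plain Python over a made-up `children` mapping (bone name to child names), and `walk_chain` and `max_length` are names I invented. It follows single-child links and stops at a leaf or at a fork, which is only one possible policy. `max_length` stands in for backing the selection up along the chain.

```python
from typing import Dict, List


def walk_chain(children: Dict[str, List[str]], root: str, max_length: int = 0) -> List[str]:
    """Follow single-child links from root.

    Stops at a leaf, at a fork, or once max_length bones are collected
    (max_length <= 0 means no limit).
    """
    chain = [root]
    current = root
    while max_length <= 0 or len(chain) < max_length:
        kids = children.get(current, [])
        if len(kids) != 1:  # leaf (no children) or fork (two or more)
            break
        current = kids[0]
        chain.append(current)
    return chain


# Usage: an arm that forks into two claws after three bones (hypothetical names).
children = {
    "arm_1": ["arm_2"],
    "arm_2": ["arm_3"],
    "arm_3": ["claw_l", "claw_r"],
}

print(walk_chain(children, "arm_1"))                # stops at the fork
print(walk_chain(children, "arm_1", max_length=2))  # shortened selection
```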

-

Ideally it would display a preview of the markers before confirming the setup, something similar to setting up a Bevel when you are modeling in Blender: you see the result, and scroll up and down with the mouse wheel to change the settings. In this case a scroll could let you progress further along the bone chain, or come back if it overshot and you want to set the markers between bones that are intersecting.

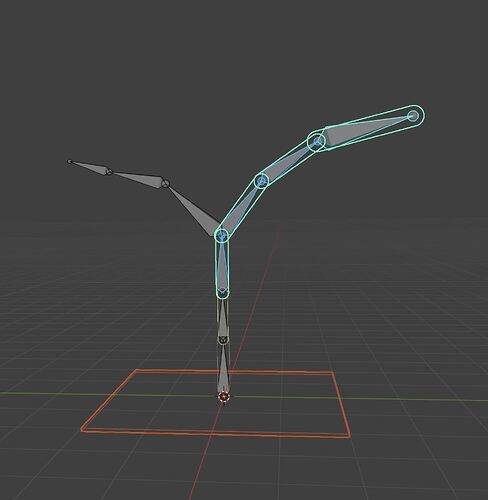

Example of this case:

The chain gets generated but doesn’t know where to end. It could pick a side, end at the intersection point, or fully chain both (these alternatives could be selected with shortcuts too, including scrolling through the individual chains?)

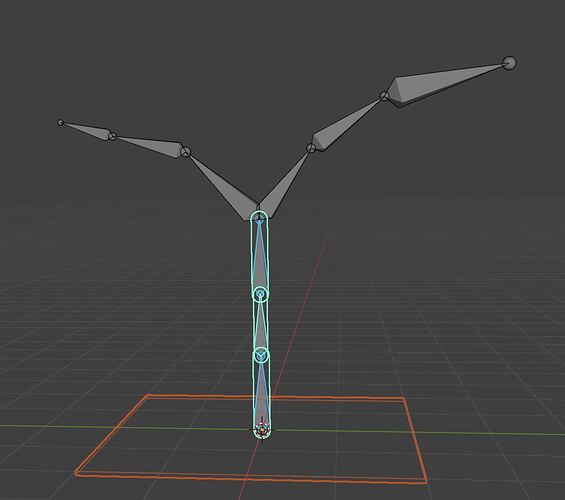

Then with the mouse wheel we can scroll down to deselect along the chain of bones for refinement:

-

In this preview state you could also offer the option to press something like S and scale all of the bones at the same time. Maybe pressing O and scrolling the mouse wheel could offset this factor, making markers at the start of the chain bigger than those at the end. Pressing F could flip this offset, making the start of the chain smaller and the end bigger. Once the offset factor is set, you could return to scale and make the whole chain bigger or smaller proportionally with the mouse wheel.
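In numbers, the scale, offset, and flip idea could look something like this. It is only a sketch of the math I have in mind: `chain_scales` and its parameters are made-up names, and the linear ramp is my assumption rather than anything Ragdoll does today.

```python
from typing import List


def chain_scales(count: int, scale: float = 1.0, offset: float = 0.0, flip: bool = False) -> List[float]:
    """Per-marker scale factors along a chain.

    scale  : uniform multiplier applied to every marker
    offset : 0 gives a uniform chain; 0.5 makes the start 1.5x and the end 0.5x
    flip   : reverse the ramp so the end is bigger than the start
    """
    if count < 1:
        return []
    factors = []
    for i in range(count):
        # t runs from 0 at the start to 1 at the end; a single marker sits in the middle.
        t = i / (count - 1) if count > 1 else 0.5
        ramp = 1.0 + offset * (1.0 - 2.0 * t)  # start: 1 + offset, end: 1 - offset
        factors.append(scale * ramp)
    return factors[::-1] if flip else factors


# Usage: five markers, doubled overall, with the start bigger than the end.
print(chain_scales(5, scale=2.0, offset=0.5))             # [3.0, 2.5, 2.0, 1.5, 1.0]
print(chain_scales(5, scale=2.0, offset=0.5, flip=True))  # [1.0, 1.5, 2.0, 2.5, 3.0]
```

Keeping the offset separate from the overall scale means the mouse wheel can change one without disturbing the other.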

-

Another possibility would be to set up all the limits at once for the current chain: all X angles, all Y, all Z, with or without an offset (similar to modifying the scale on all selected bones at once). This way the root could have a different limit than the end of the chain, to create variations.
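For the limits, a simple way to picture it is blending each axis from a root value to an end value. This is a sketch with invented names (`chain_limits`, the `x`/`y`/`z` keys), and I am assuming angles in degrees. It does not reflect how Ragdoll actually stores limits.

```python
from typing import Dict, List

Limits = Dict[str, float]  # axis name -> limit angle in degrees (hypothetical layout)


def chain_limits(count: int, root: Limits, tip: Limits) -> List[Limits]:
    """Linearly blend per-axis limits from the root marker to the tip marker."""
    result = []
    for i in range(count):
        t = i / (count - 1) if count > 1 else 0.0  # 0 at the root, 1 at the tip
        result.append({axis: root[axis] + (tip[axis] - root[axis]) * t for axis in root})
    return result


# Usage: a stiff root that loosens toward the tip on X and Y, with Z kept constant.
limits = chain_limits(
    3,
    root={"x": 20.0, "y": 20.0, "z": 10.0},
    tip={"x": 60.0, "y": 60.0, "z": 10.0},
)
for marker_limits in limits:
    print(marker_limits)
```

Passing the same values for `root` and `tip` gives the "without offset" case, where every marker gets identical limits.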

This kind of workflow could easily solve cases like:

The reason I’ve been thinking a lot about this is that lately I’ve been using very large rigs to get fine-tuned control over things that are often done procedurally or with simulations.

I’ve tried everything from creating world-space empties to move things around with proportional editing (which ignores interactions between bones), to using Blender’s own IK and rigid body solvers (with horribly unpredictable and very slow results), to using geometry nodes and having things look… well, procedural. And if we are on this forum, it’s because we would like to animate many of the things we often can’t.

And I must say, I understand you guys are working hard on a new rigging schema, and also that when users mention things like batch asset processing, automated settings and the like, it can sound like that kind of detail-oriented workflow misses the point of the software’s originally intended use, or asks it to account for things that can’t be generalized.

But the reason I’ve limited the description of the features to basically selections, scales, and offsets is to make asset processing for the less critical parts of the pipeline more efficient, so that Ragdoll can be used in scenarios where it would otherwise be too time-consuming.

Just to give a real example: on the last test I ran on a project, I immediately regretted it when I realized that I had close to 500 bones across multiple chains, that Bendy Bones weren’t gonna cut it, and that a physical approach was needed while still preserving the ability to rig and clean up afterwards.

That was the point where I appreciated all the speed optimizations mentioned in the news section of the documentation. Ragdoll’s library and those solvers you guys implemented are like alien technology, I have no other way to describe it. I was initially attracted by the live mode and the core functionality of the software, but after dealing with all of this I now see why so many have hit the math wall that is trying to make a quick solver and decided to just walk away and try something else. It’s crazy just how bad the performance of everything else was in that scenario, while Ragdoll was the only thing on the scene performing in real time.

Unfortunately, setting up the rig would’ve taken too long, so I had to opt for simulation nodes. But if something like this could be implemented, a lot of Blender’s limitations could be sidestepped, and in a more performant way, for things that otherwise can’t take advantage of Ragdoll because of time constraints, while still keeping the ability to fine-tune the animation.

And of course, with this as a possibility it would be way more convenient to set up simple FK chains with no special controllers or dependencies, since at the end of the day Ragdoll would handle that part of the animation. Many doors would open in the pipeline if this were possible.