Hello @katie, and welcome to the forums!

I can spot a few pieces of low-hanging fruit in your attempt so far, but the headline of my response is that Ragdoll, like all pure-physics approaches, is no panacea when it comes to cleaning up motion capture.

But let’s talk about what it can do, and then dive into its known limitations.

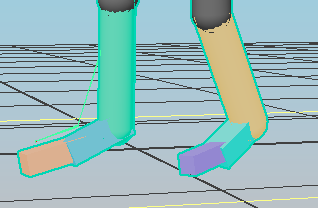

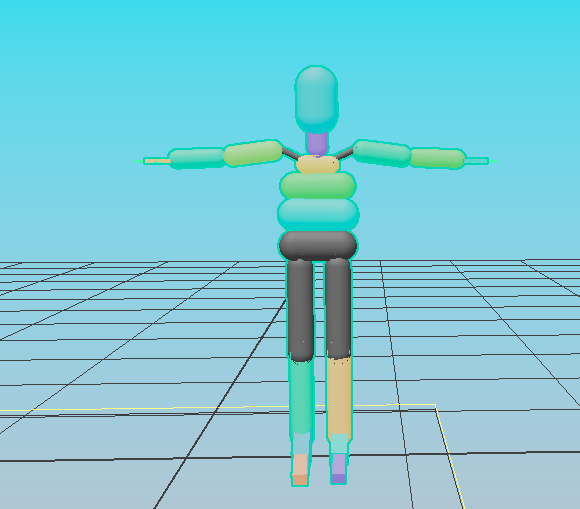

The first thing to address in your example is the proportions of your character. It’s important that every child has less volume than its parent. There are exceptions, but generally this gives you the most stable and realistic simulation, and it mimics our own anatomy well. Most importantly, those clavicles could never sustain the weight of those arms. The rest is not bad, although I’d make the feet wider to get a more stable, flat contact with the ground. The hands and fingers are much too thin. So in addition to making children smaller, also try to maintain a 1-10x ratio between every Marker. For example, if the hand weighs 1.0 kg, try to keep its children in the 0.1-0.5 kg range.
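The two rules of thumb above (children lighter than their parent, and masses within roughly a 1-10x ratio of one another) are easy to check mechanically. Here’s a minimal Python sketch, assuming you’ve already read each Marker’s mass into a plain dictionary; the `check_masses` helper and the example masses are hypothetical illustrations, not part of Ragdoll’s API.

```python
from typing import Dict, List, Optional, Tuple


def check_masses(
    masses: Dict[str, float],
    parents: Dict[str, Optional[str]],
    max_ratio: float = 10.0,
) -> List[Tuple[str, str]]:
    """Return (marker, reason) pairs for markers that break the rules of thumb."""
    problems = []
    for name, parent in parents.items():
        if parent is None:
            continue  # the root has nothing to compare against
        child_mass, parent_mass = masses[name], masses[parent]
        if child_mass >= parent_mass:
            problems.append((name, f"heavier than or equal to parent '{parent}'"))
        elif parent_mass / child_mass > max_ratio:
            problems.append((name, f"more than {max_ratio:g}x lighter than '{parent}'"))
    return problems


# Hypothetical masses in kg, mirroring the clavicle/arm and hand/finger issues.
masses = {"spine": 10.0, "clavicle": 0.5, "upperArm": 2.0, "hand": 1.0, "finger": 0.02}
parents = {
    "spine": None,
    "clavicle": "spine",
    "upperArm": "clavicle",
    "hand": "upperArm",
    "finger": "hand",
}

for name, reason in check_masses(masses, parents):
    print(name, reason)
```

With these made-up numbers the clavicle, the upper arm and the finger get flagged, while the hand (half the mass of its parent) passes.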

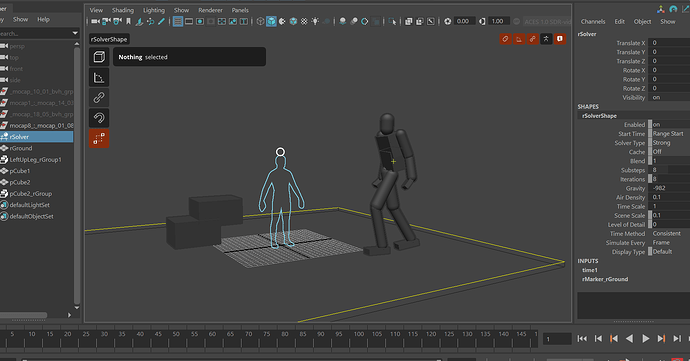

Since your file is an .mb, I’m unable to open it on our end for security reasons, so I’ve used another CMU clip instead. Here’s the starting point.

As we can see, it’s able to match the pose, but not retain balance. To retain balance, we have a few options. One is to make parts of your character Animated, as you did in your example. However, this means those parts will be unaffected by the simulation and retain any defects, such as jitter.

To get rid of jitter, we must let the entire character remain Simulated and instead achieve balance via, e.g., a Pin Constraint.

By parenting the Pin Constraint underneath the control we’ve assigned it to, it will carry the animation into the simulation similar to the Animated behaviour, but still allow some deviation based on the Pin Constraint parameters.
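Conceptually, a positional pin behaves like a spring with a damper between the body and its target, which is what lets the simulation deviate from the animation without drifting away from it. The sketch below is a simplified illustration, assuming a single 1D point mass integrated with semi-implicit Euler; `stiffness` and `damping` are generic stand-ins, and Ragdoll’s actual solver and parameter units will differ.

```python
from dataclasses import dataclass


@dataclass
class Body:
    position: float  # 1D for clarity; a real rigid body has 3D position and rotation
    velocity: float
    mass: float


def pin_force(body: Body, target: float, stiffness: float, damping: float) -> float:
    """Spring pulls the body toward the target; the damper resists its velocity."""
    return stiffness * (target - body.position) - damping * body.velocity


def step(body: Body, target: float, stiffness: float, damping: float,
         external_force: float, dt: float) -> None:
    """Advance one frame with semi-implicit Euler (velocity first, then position)."""
    force = pin_force(body, target, stiffness, damping) + external_force
    body.velocity += force / body.mass * dt
    body.position += body.velocity * dt


# A constant push (e.g. from a contact) working against a pin whose target is 0.0.
body = Body(position=0.0, velocity=0.0, mass=1.0)
for _ in range(600):  # 10 seconds at 60 fps
    step(body, target=0.0, stiffness=100.0, damping=20.0, external_force=10.0, dt=1.0 / 60.0)

print(round(body.position, 3))  # settles at external_force / stiffness = 0.1
```

Updating `target` every frame from the animated control is the sketch’s equivalent of parenting the pin under it: the body follows, but only as firmly as the stiffness allows.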

We’re not there yet though, because the Pin Constraint is clearly pulling the character in every direction. So what if we remove any pull in the Y-axis, letting him fall to the ground whilst still following the walking path?

Better. Now, what if we try the opposite, having it follow only Y and not XZ?
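Restricting the pull to certain axes amounts to zeroing the pin’s force on the axes you want to leave to physics. A minimal sketch in 3D, assuming Y is up and the pin is modelled as a plain spring toward its target; `axis_mask` is a hypothetical parameter for illustration, not a Ragdoll attribute name.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z), Y up


def masked_pin_force(position: Vec3, target: Vec3, stiffness: float, axis_mask: Vec3) -> Vec3:
    """Spring force toward the target, scaled per axis (1.0 = follow, 0.0 = leave to physics)."""
    fx, fy, fz = (stiffness * (t - p) * m for p, t, m in zip(position, target, axis_mask))
    return (fx, fy, fz)


hip = (0.0, 0.8, 0.0)         # simulated hip position (hypothetical values, metres)
hip_target = (0.3, 1.0, 0.1)  # where the mocap says the hip should be

follow_xz = masked_pin_force(hip, hip_target, 100.0, (1.0, 0.0, 1.0))  # free to fall in Y
follow_y = masked_pin_force(hip, hip_target, 100.0, (0.0, 1.0, 0.0))   # forward motion left to physics
```

On a masked-out axis the pin contributes nothing, so gravity, contacts and the mocap-driven joint rotations alone decide the motion along it.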

Now any forward movement comes purely from the motion capture itself, which looks very natural. But it’s still not there; it’s still being pulled by a worldspace force, like someone reaching into the scene and grabbing the hip.

So what if we follow only a worldspace rotation, rather than a worldspace position?
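Following only a worldspace rotation means the pin contributes a torque but no force, so nothing drags the hip through space. A minimal sketch, assuming orientations are unit quaternions in (w, x, y, z) order and the pin is a simple angular spring-damper; the function names and the `stiffness`/`damping` parameters are illustrative, not Ragdoll’s.

```python
import math
from typing import Tuple

Quat = Tuple[float, float, float, float]  # (w, x, y, z), unit length
Vec3 = Tuple[float, float, float]


def q_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def q_conj(q: Quat) -> Quat:
    w, x, y, z = q
    return (w, -x, -y, -z)


def orientation_pin_torque(current: Quat, target: Quat, angular_velocity: Vec3,
                           stiffness: float, damping: float) -> Vec3:
    """Torque rotating `current` toward `target`; there is no positional pull at all."""
    w, x, y, z = q_mul(target, q_conj(current))  # worldspace rotation from current to target
    if w < 0.0:  # take the shorter way around
        w, x, y, z = -w, -x, -y, -z
    sin_half = math.sqrt(x * x + y * y + z * z)
    if sin_half < 1e-9:
        error = (0.0, 0.0, 0.0)  # already aligned
    else:
        angle = 2.0 * math.atan2(sin_half, w)
        error = (x / sin_half * angle, y / sin_half * angle, z / sin_half * angle)
    tx, ty, tz = (stiffness * e - damping * v for e, v in zip(error, angular_velocity))
    return (tx, ty, tz)


half = math.radians(15.0)
tilted = (math.cos(half), math.sin(half), 0.0, 0.0)  # hip pitched 30 degrees about X
upright = (1.0, 0.0, 0.0, 0.0)
torque = orientation_pin_torque(tilted, upright, (0.0, 0.0, 0.0), stiffness=50.0, damping=5.0)
```

The resulting torque points along negative X, tipping the hip back toward upright, while its worldspace position stays entirely up to the simulation.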

Now the character remains upright because the hip is staying upright. But the feet are still getting stuck in the ground, either because the mocap is too close to the ground or because our physics shapes are too big. We could attempt to rid ourselves of this by removing friction altogether.
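Why removing friction trades sticking for sliding follows from the Coulomb model that rigid-body solvers commonly approximate: a contact holds as long as the tangential force stays within μ times the normal force. A simplified sketch, assuming a single contact and one friction coefficient `mu` for both static and dynamic friction; the names are illustrative.

```python
def contact_slips(tangential_force: float, normal_force: float, mu: float) -> bool:
    """Coulomb friction: the contact sticks while |F_t| <= mu * F_n."""
    return abs(tangential_force) > mu * normal_force


def friction_force(tangential_force: float, normal_force: float, mu: float) -> float:
    """Force the ground applies along the surface, opposing the push."""
    limit = mu * normal_force
    # Clamp to the friction limit; any push beyond it goes into sliding.
    return -max(-limit, min(limit, tangential_force))


# A foot pressing down with 700 N while pushing sideways with 300 N (made-up numbers).
grippy = contact_slips(300.0, 700.0, mu=0.8)     # sticks
slippery = contact_slips(300.0, 700.0, mu=0.0)   # slides: nothing resists the push
```

With `mu` at zero any sideways push at all becomes sliding, which is exactly the slippery look described next.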

But that just ends up looking slippery, which is not what we want. So what if we augment the mocap with an animation layer, lifting the leg slightly to let the simulation walk as intended?
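An additive animation layer simply sums an offset curve on top of the underlying mocap, leaving the original data untouched. A minimal sketch of the idea, assuming a per-frame foot height channel and a hypothetical swing window; in Maya you would normally key this on an additive animation layer rather than compute it in code.

```python
import math
from typing import List


def lift_offset(frame: int, start: int, end: int, height: float) -> float:
    """Smooth bump that is 0 outside (start, end) and peaks at `height` mid-swing."""
    if frame <= start or frame >= end:
        return 0.0
    t = (frame - start) / (end - start)
    return height * math.sin(math.pi * t)


def apply_additive_layer(base: List[float], start: int, end: int, height: float) -> List[float]:
    """Base mocap value plus the layer's offset, frame by frame."""
    return [value + lift_offset(frame, start, end, height) for frame, value in enumerate(base)]


# Hypothetical foot height (cm) that barely clears the ground during a swing.
mocap_foot_y = [0.0, 0.5, 1.0, 1.2, 1.0, 0.5, 0.0, 0.0]
layered = apply_additive_layer(mocap_foot_y, start=0, end=6, height=3.0)
```

Because the offset fades to zero at the start and end of the swing, the planted frames keep their original mocap values.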

OK, I could do a better job animating, but hopefully you get the gist. Counter-animating the animation may seem counterintuitive if your goal is to fix the simulation to better match the mocap, but remember that the mocap is flawed and the simulation is approximate. Expect to edit both to find a common middle ground.

Next, things are a little wobbly, so I’ll crank up the stiffness to stick more closely to the mocap orientation, and the damping to make it less springy.
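For a linear spring-damper, “less springy” has a precise meaning: the damping ratio ζ = c / (2√(km)). Below 1 the motion overshoots and oscillates; at 1 or above it settles without overshoot. A small sketch, assuming Ragdoll’s stiffness and damping behave roughly like k and c here (its actual parameterization may be normalized differently):

```python
import math


def damping_ratio(stiffness: float, damping: float, mass: float) -> float:
    """zeta = c / (2 * sqrt(k * m)) for a mass on a linear spring-damper."""
    return damping / (2.0 * math.sqrt(stiffness * mass))


def overshoot_fraction(zeta: float) -> float:
    """Peak overshoot of the step response; zero once critically damped."""
    if zeta >= 1.0:
        return 0.0
    return math.exp(-zeta * math.pi / math.sqrt(1.0 - zeta * zeta))


loose = damping_ratio(stiffness=100.0, damping=4.0, mass=1.0)   # 0.2: overshoots by about half
tight = damping_ratio(stiffness=400.0, damping=40.0, mass=1.0)  # 1.0: critically damped
```

Note that raising stiffness on its own lowers ζ and makes things springier, which is why stiffness and damping usually need to go up together.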

OK, decent. In this case, we’ve got an invisible environment, so let’s add in the critical elements.

And presto, our result.

There is still much to improve. Some of it can be achieved by tuning the input mocap and simulation parameters, but it is a hard challenge, so do not underestimate it.

As a bonus, if you find yourself tuning something towards the middle or end of your simulation and don’t want to continuously replay, try the Ragdoll → Edit → Cache menu item to refresh the current frame whilst you edit.

Limitations

Our result does not perfectly align with the worldspace position of the input mocap, and it could not without sacrificing realism. How close it gets depends on, amongst other things:

- The size of the feet; longer feet would travel further with each step

- The geometry of the feet; whether they are square or round affects where contact happens

- Friction between foot and ground; less friction allows more slip, reducing the overall distance

- Gravity and other physics parameters; we’re relying on defaults here, but if we look at the scale Ragdoll thinks your character is at, we can see he’s actually a giant.
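To see why scale matters, note that a body falling from height h takes t = √(2h/g). If a character is effectively 10x real-world size while gravity stays at 9.81 m/s², every fall takes √10 ≈ 3.2x longer, which reads as slow motion. A small sketch, assuming a typical adult height of about 1.75 m as the reference; the function names are illustrative, and this does not model how Ragdoll itself interprets scene scale.

```python
import math

G = 9.81  # m/s^2, standard gravity


def fall_time(height_m: float, gravity: float = G) -> float:
    """Time to free-fall from rest through height_m: t = sqrt(2h / g)."""
    return math.sqrt(2.0 * height_m / gravity)


def scale_factor(character_height_m: float, reference_height_m: float = 1.75) -> float:
    """How many times larger than a typical adult the character is."""
    return character_height_m / reference_height_m


def gravity_for_real_world_timing(character_height_m: float) -> float:
    """Gravity that makes the scaled character fall on real-world timing."""
    return G * scale_factor(character_height_m)


giant_height = 17.5  # hypothetical: a character that reads as 10x too large
s = scale_factor(giant_height)
slow = fall_time(0.5 * s)  # a 0.5 m drop, scaled up, under default gravity
fixed = fall_time(0.5 * s, gravity_for_real_world_timing(giant_height))
```

Scaling gravity by the same factor as the character restores real-world timing, since h and g scale together inside the square root. The alternative is to scale the character down to real-world size; either way, the goal is that size and gravity agree.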

Pure-physics approaches also do not understand balance. What we’re doing here is one giant cheat, using worldspace forces that don’t exist in the real world. The closest real-world equivalent would be being strapped into a harness, like the ones stunt actors wear. It adds to the feeling of fakeness and must be hidden and tempered to blend in.

That’s why things like foot contacts are such a difficult challenge to solve with pure physics. The true solution is one that does understand balance, and hopefully we can roll out our take on this real soon.

Hope it helps, and let me know if you have any further questions.

PS: Here’s the scene file, made with Ragdoll 2024.05.07

mocap_v003.zip (1.8 MB)